k8s, vmware, ansible, metalLB, prometheus, grafana, alertmanager, metrics-server, harbor, clair, notary

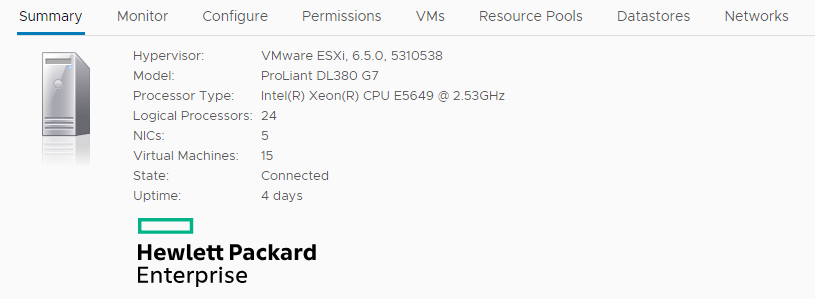

If you’re short on resources in your home lab and simply can’t deploy an OpenStack private cloud to play around with, this tutorial will walk you through setting up a highly-available k8s cluster on VMware ESX vms. The principles used to deploy them very much resemble those used to deploy OpenStack instances so you’ll be able to quickly and effectively walk through the very first step in this tutorial.

The k8s cluster we’ll be deploying will have 3x master and 3x worker nodes and will use metalLB for bare-metal load balancing, prometheus, grafana and alertmanager for monitoring, metrics and reporting and harbor with clair and notary for image repository, signing and scanning.

We will be:

- checking out and customising ansible-deploy-vmware-vm

- creating vms from templates

- setting up:

  - the k8s cluster via kubespray

  - metalLB

  - prometheus, grafana and alertmanager

  - metrics-server

  - harbor

  - notary and clair

What we won’t be doing is:

INFO: the VMware templates were built from an Ubuntu 18.04 vm.

STEP 1 — deploy multiple VMWare virtual machines from a template

Deploy the vms via Ansible. First, check out ansible-deploy-vmware-vm and cd into it:

git clone https://github.com/cloudmaniac/ansible-deploy-vmware-vm.git

cd ansible-deploy-vmware-vm

Second, we need to tell Ansible how to connect to our VMware ESX cluster. Edit or create answerfile.yml and fill in the self-explanatory blanks:

# Infrastructure

# - Defines the vCenter / vSphere environment

deploy_vsphere_host: '<vsphere_ip>'

deploy_vsphere_user: '<username>'

deploy_vsphere_password: '<password>'

deploy_vsphere_datacenter: '<datacenter>'

deploy_vsphere_folder: ''

esxi_hostname: '<esx_hostname>'

# Guest

# - Describes virtual machine common options

guest_network: '<network_name>'

guest_netmask: '<netmask>'

guest_gateway: '<gw>'

guest_dns_server: '<dns>'

guest_domain_name: '<domain_name>'

guest_id: '<guestID>'

guest_memory: '<RAM>'

guest_vcpu: '<CPU_cores>'

guest_template: '<template_name>'

Define the vms we want to deploy. Sample vms-to-deploy:

[prod-k8s-master]

prod-k8s-master01 deploy_vsphere_datastore='ESX2' guest_custom_ip='<ip>' guest_notes='Master #1'

prod-k8s-master02 deploy_vsphere_datastore='ESX2' guest_custom_ip='<ip>' guest_notes='Master #2'

prod-k8s-master03 deploy_vsphere_datastore='ESX2' guest_custom_ip='<ip>' guest_notes='Master #3'

[prod-k8s-workers]

prod-k8s-worker01 deploy_vsphere_datastore='ESX2' guest_custom_ip='<ip>' guest_notes='Worker #01'

prod-k8s-worker02 deploy_vsphere_datastore='ESX2' guest_custom_ip='<ip>' guest_notes='Worker #02'

prod-k8s-worker03 deploy_vsphere_datastore='ESX2' guest_custom_ip='<ip>' guest_notes='Worker #03'

[prod-k8s-harbor]

prod-k8s-harbor01 deploy_vsphere_datastore='ESX2' guest_custom_ip='<ip>' guest_notes='Harbor #01'

Define the playbook. Sample deploy-k8s-vms-prod.yml:

---
- hosts: all
  gather_facts: false
  vars_files:
    - answerfile.yml
  roles:
    - deploy-vsphere-template

Let’s deploy the vms:

ansible-playbook -vv -i vms-to-deploy deploy-k8s-vms-prod.yml
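A placeholder left behind in vms-to-deploy may only surface once the playbook is already cloning templates, so it can be worth checking the inventory before a run. The sketch below is one way to do that; `check_vm_inventory` is a hypothetical helper introduced here (it is not part of ansible-deploy-vmware-vm) and it assumes the one-host-per-line layout of the sample inventory above.

```shell
# Hypothetical helper: print the name of every host in an inventory file whose
# guest_custom_ip is missing or is not a dotted-quad address.
# Exit status is 1 if at least one such host was found, 0 otherwise.
check_vm_inventory() {
    awk -v q="'" '
        # Skip blank lines, comments and [group] headers.
        /^[ \t]*$/ || /^[ \t]*#/ || /^[ \t]*\[/ { next }
        {
            ip = ""
            for (i = 2; i <= NF; i++)
                if ($i ~ /^guest_custom_ip=/) ip = $i
            sub(/^guest_custom_ip=/, "", ip)   # drop the key
            gsub(q, "", ip)                    # drop the surrounding quotes
            if (ip !~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/) { print $1; bad = 1 }
        }
        END { exit bad + 0 }
    ' "$1"
}

# Usage (assumption: run from the ansible-deploy-vmware-vm checkout):
#   check_vm_inventory vms-to-deploy || echo "fix the hosts listed above"
```

The check only looks at the shape of the address; it does not verify that the IP is free or inside the guest network.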

STEP 2

Deploy the k8s cluster on top of the created vms with kubespray. The commands below are run from the root of a kubespray checkout:

sudo pip install -r requirements.txt

# copy the sample inventory

cp -rfp inventory/sample inventory/mycluster

# declare all the k8s cluster IPs (masters and workers only)

declare -a IPS=(x.x.x.x y.y.y.y z.z.z.z)

# see below samples before running this cmd

CONFIG_FILE=inventory/mycluster/hosts-prod.yml python3 contrib/inventory_builder/inventory.py ${IPS[@]}

Sample hosts-prod.yml:

all:
  hosts:
    prod-k8s-master01:
      ansible_host: <ip>
      ip: <ip>
      access_ip: <ip>
    prod-k8s-master02:
      ansible_host: <ip>
      ip: <ip>
      access_ip: <ip>
    prod-k8s-master03:
      ansible_host: <ip>
      ip: <ip>
      access_ip: <ip>
    prod-k8s-worker01:
      ansible_host: <ip>
      ip: <ip>
      access_ip: <ip>
    prod-k8s-worker02:
      ansible_host: <ip>
      ip: <ip>
      access_ip: <ip>
    prod-k8s-worker03:
      ansible_host: <ip>
      ip: <ip>
      access_ip: <ip>
  children:
    kube-master:
      hosts:
        prod-k8s-master01:
        prod-k8s-master02:
        prod-k8s-master03:
    kube-node:
      hosts:
        prod-k8s-worker01:
        prod-k8s-worker02:
        prod-k8s-worker03:
    etcd:
      hosts:
        prod-k8s-master01:
        prod-k8s-master02:
        prod-k8s-master03:
    k8s-cluster:
      children:
        kube-master:
        kube-node:
    calico-rr:
      hosts: {}

Sample inventory.ini (make sure the inventory file uses the vms’ proper names, i.e. the ones defined in “ansible-deploy-vmware-vm/vms-to-deploy”):

# ## Configure 'ip' variable to bind kubernetes services on a

# ## different ip than the default iface

# ## We should set etcd_member_name for etcd cluster. The node that is not a etcd member do not need to set the value, or can set the empty string value.

[all]

# node1 ansible_host=95.54.0.12 # ip=10.3.0.1 etcd_member_name=etcd1

# node2 ansible_host=95.54.0.13 # ip=10.3.0.2 etcd_member_name=etcd2

# node3 ansible_host=95.54.0.14 # ip=10.3.0.3 etcd_member_name=etcd3

# node4 ansible_host=95.54.0.15 # ip=10.3.0.4 etcd_member_name=etcd4

# node5 ansible_host=95.54.0.16 # ip=10.3.0.5 etcd_member_name=etcd5

# node6 ansible_host=95.54.0.17 # ip=10.3.0.6 etcd_member_name=etcd6

# ## configure a bastion host if your nodes are not directly reachable

# bastion ansible_host=x.x.x.x ansible_user=some_user

[kube-master]

# node1

# node2

[etcd]

# node1

# node2

# node3

[kube-node]

# node2

# node3

# node4

# node5

# node6

[k8s-cluster:children]

kube-master

kube-node

Run the playbook to create the cluster:

ansible-playbook -vvv -i inventory/mycluster/hosts-prod.yml --become --become-user=root cluster.yml
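Once the playbook finishes, a quick way to confirm that all six nodes joined is to filter the output of `kubectl get nodes`. This is a minimal sketch: `not_ready_nodes` is a hypothetical helper name, and it assumes the default column layout in which STATUS is the second column.

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the name of every node whose STATUS is not exactly "Ready".
# Note: a cordoned node ("Ready,SchedulingDisabled") is also reported.
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Usage (on a master node, or wherever kubectl is configured):
#   kubectl get nodes --no-headers | not_ready_nodes
```

An empty result means every node reported `Ready`; anything else is a node worth inspecting with `kubectl describe node <name>`.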

STEP 3

MetalLB is a bare-metal load balancer for k8s that makes your current network extend into your k8s cluster.

Connect to one of the master nodes over ssh and set up your environment:

source <(kubectl completion bash) # or zsh

Deploy metalLB by running the following on one of the master nodes:

kubectl apply -f https://raw.githubusercontent.com/google/metallb/v0.8.3/manifests/metallb.yaml

MetalLB remains idle until configured. As such, we need to define an IP range that the k8s cluster can use and that is outside of any DHCP pool.

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - <ip_range_start>-<ip_range_end>
EOF

Check the status of the pods with:

kubectl -n metallb-system get po
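The requirement that the MetalLB range sits outside any DHCP pool is easy to get wrong by one address. As a rough sketch, the two hypothetical helpers below (`ip_to_int` and `ranges_overlap`, both names introduced here) compare two IPv4 ranges numerically. They assume plain dotted-quad input without leading zeros and a shell with 64-bit arithmetic.

```shell
# Convert a dotted-quad IPv4 address to its integer value.
# The body runs in a subshell so the IFS change does not leak to the caller.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Succeed (exit 0) if range A [$1..$2] and range B [$3..$4] share any address.
ranges_overlap() {
    a_start=$(ip_to_int "$1"); a_end=$(ip_to_int "$2")
    b_start=$(ip_to_int "$3"); b_end=$(ip_to_int "$4")
    [ "$a_start" -le "$b_end" ] && [ "$b_start" -le "$a_end" ]
}

# Usage (all four addresses are example values, not taken from this setup):
#   if ranges_overlap 192.168.1.240 192.168.1.250 192.168.1.100 192.168.1.200; then
#       echo "MetalLB range collides with the DHCP pool"
#   fi
```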

STEP 4

Install prometheus, grafana and alertmanager:

git clone https://github.com/coreos/kube-prometheus.git

cd kube-prometheus

kubectl create -f manifests/setup

until kubectl get servicemonitors --all-namespaces ; do date; sleep 1; echo ""; done

kubectl create -f manifests/

# To teardown the stack:

#kubectl delete -f manifests/

In order to access the dashboards via the LoadBalancer IPs, we need to change a few service types from “ClusterIP” to “LoadBalancer”:

kubectl -n monitoring edit svc prometheus-k8s

kubectl -n monitoring edit svc grafana

kubectl -n monitoring edit svc alertmanager-main

# Get the external IP for the edited services

kubectl -n monitoring get svc

In order to access the web interface of these services, use the following ports:

– grafana -> 3000 (default usr/pass is admin/admin)

– prometheus -> 9090

– alertmanager -> 9093
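The three interactive `kubectl edit` sessions can also be replaced by `kubectl patch`, which is easier to repeat after a teardown. The following is a sketch rather than the original procedure: `expose_monitoring` and the `KUBECTL` override variable are hypothetical names introduced here, while the service names and namespace are the ones used above.

```shell
# Allow the kubectl binary to be overridden, e.g. for a dry run with KUBECTL=echo.
KUBECTL=${KUBECTL:-kubectl}

# Switch the three monitoring services from ClusterIP to LoadBalancer.
expose_monitoring() {
    for svc in prometheus-k8s grafana alertmanager-main; do
        "$KUBECTL" patch svc "$svc" -n monitoring \
            -p '{"spec": {"type": "LoadBalancer"}}' || return 1
    done
}

# Usage:
#   expose_monitoring && kubectl -n monitoring get svc
```

Setting `KUBECTL=echo` before calling the function prints the three commands instead of running them.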

STEP 5

Installing the metrics-server or how to get “kubectl top nodes” and “kubectl top pods” to work.

git clone https://github.com/kubernetes-incubator/metrics-server.git

cd metrics-server

kubectl create -f deploy/1.8+/

kubectl top nodes

kubectl top pods --all-namespaces

STEP 6

Installing Harbor with clair and notary support.

ssh to your harbor vm and install docker and docker-compose:

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

sudo add-apt-repository \
  "deb [arch=amd64] https://download.docker.com/linux/ubuntu \
  $(lsb_release -cs) \
  stable"

sudo apt-get update && sudo apt-get install -y docker-ce

sudo curl -L "https://github.com/docker/compose/releases/download/1.25.3/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

# allow users to use docker without administrator privileges

sudo usermod -aG docker $USER

Log out and back in, then check docker:

docker info

Time to generate the SSL certificates:

# generate a certificate authority

openssl req -newkey rsa:4096 -nodes -sha256 -keyout ca.key -x509 -days 3650 -out ca.crt

# generate a certificate signing request

openssl req -newkey rsa:4096 -nodes -sha256 -keyout prod-k8s-harbor01.lab.local.key -out prod-k8s-harbor01.lab.local.csr

# Create a configuration file for the Subject Alternative Name.

vim extfile.cnf

subjectAltName = IP:<ip>

# generate the certificate

openssl x509 -req -days 3650 -in prod-k8s-harbor01.lab.local.csr -CA ca.crt -CAkey ca.key -CAcreateserial -extfile extfile.cnf -out prod-k8s-harbor01.lab.local.crt

# copy the certificate to /etc/ssl/certs.

sudo cp *.crt *.key /etc/ssl/certs

Download and install Harbor:

wget https://github.com/goharbor/harbor/releases/download/v1.9.4/harbor-online-installer-v1.9.4.tgz

tar xvzf harbor-online-installer-v1.9.4.tgz

cd harbor

Edit harbor.yml and change a few options:

hostname: <harbor_ip>
# http related config
http:
  port: 80
https:
  port: 443
  certificate: /etc/ssl/certs/prod-k8s-harbor01.lab.local.crt
  private_key: /etc/ssl/certs/prod-k8s-harbor01.lab.local.key
harbor_admin_password: <your_admin_pass>

Finally, install Harbor, enabling clair and notary:

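sudo ./install.sh --with-notary --with-clair

Configure the docker daemon on each k8s node that will pull images from Harbor so that it trusts the CA certificate. List those nodes in IPS (the worker nodes in particular, since that is where pods are scheduled):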

export harbor_ip=<harbor_local_ip>

declare -a IPS=(<k8s_master1> <k8s_master2> <k8s_master3>)

for i in "${IPS[@]}"; do
  scp ../ca.crt "$i":
  ssh "$i" "sudo mkdir -p /etc/docker/certs.d/$harbor_ip && \
    sudo mv ca.crt /etc/docker/certs.d/$harbor_ip/ && \
    sudo systemctl restart docker"
done

Log in to a k8s master node and create a secret object for harbor:

kubectl create secret docker-registry harbor \
  --docker-server=https://<harbor_ip> \
  --docker-username=admin \
  --docker-email=admin@claud-computing.net \
  --docker-password='<your_harbor_admin_password>'

Log in to the harbor web-interface and create a new project called “private”.

To deploy images to Harbor, we need to pull them, tag them and push them. From the harbor machine (or any other that has the certificates in place as above), perform the following:

docker pull gcr.io/kuar-demo/kuard-amd64:1

docker tag gcr.io/kuar-demo/kuard-amd64:1 <harbor_ip>/private/kuard:v1

docker login <harbor_ip>

docker push <harbor_ip>/private/kuard:v1

Deploy the kuard app on k8s. ssh to a node where you have access to the cluster and create kuard-deployment.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kuard-deployment
  labels:
    app: kuard
spec:
  replicas: 1
  selector:
    matchLabels:
      run: kuard
  template:
    metadata:
      labels:
        run: kuard
    spec:
      containers:
      - name: kuard
        image: <harbor_ip>/private/kuard:v1
      imagePullSecrets:
      - name: harbor

Apply it.

kubectl create -f kuard-deployment.yaml

kubectl get po
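If the pod does not reach `Running`, the usual suspects with a private registry are a node that does not trust the Harbor CA or a missing image pull secret, both of which show up as an image pull error in the STATUS column. A small sketch for spotting them: `image_pull_failures` is a hypothetical helper name, and it assumes the default `kubectl get po` column order (NAME, READY, STATUS, ...).

```shell
# Hypothetical helper: read `kubectl get po --no-headers` output on stdin and
# print "<pod> <status>" for pods that are failing to pull their image.
image_pull_failures() {
    awk '$3 == "ErrImagePull" || $3 == "ImagePullBackOff" { print $1, $3 }'
}

# Usage:
#   kubectl get po --no-headers | image_pull_failures
```

For any pod listed, `kubectl describe po <name>` shows the underlying event, for example a certificate or authentication error returned by the registry.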

STEP 7

Signing docker images with Notary. Install it first:

sudo wget https://github.com/theupdateframework/notary/releases/download/v0.6.1/notary-Linux-amd64 -O /usr/local/bin/notary

sudo chmod +x /usr/local/bin/notary

If needed, copy the “ca.crt” to the client machine you’re working from.

Check if you can connect to the harbor server:

openssl s_client -connect <harbor_ip>:443 -CAfile /etc/docker/certs.d/<harbor_ip>/ca.crt -no_ssl2

You should get something like this:

CONNECTED(00000005)
depth=1 C = EU, ST = Example, L = Example, O = Example, OU = Example, CN = ca.example.local, emailAddress = root@ca.example.local
verify return:1
depth=0 C = EU, ST = Example, O = Example, CN = notary-server.example.local
verify return:1
---
Certificate chain
 0 s:/C=EU/ST=Example/O=Example/CN=notary-server.example.local
   i:/C=EU/ST=Example/L=Example/O=Example/OU=Example/CN=ca.example.local/emailAddress=root@ca.example.local

Now pull an image from docker hub and tag it but don’t push it just yet.

docker pull nginx:latest

docker tag nginx:latest <harbor_ip>/private/nginx:latest

Let’s enable Docker Content Trust and then push the image. Please note that when first pushing a signed image, you will be asked to create a password.

export DOCKER_CONTENT_TRUST_SERVER=https://<harbor_ip>:4443 DOCKER_CONTENT_TRUST=1

docker login <harbor_ip>

docker push <harbor_ip>/private/nginx:latest

unset DOCKER_CONTENT_TRUST_SERVER DOCKER_CONTENT_TRUST

The Docker image is now pushed to our registry server and signed by the Notary server. We can verify this with:

notary --tlscacert ca.crt -s https://<harbor_ip>:4443 -d ~/.docker/trust list <harbor_ip>/private/nginx

Test that you can pull from docker hub and harbor:

docker pull nginx

docker pull <harbor_ip>/private/nginx:latest

To use a completely private repository, leave the “DOCKER_CONTENT_TRUST_SERVER” and “DOCKER_CONTENT_TRUST” environment variables set on all the k8s cluster machines.
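For occasional signed pushes from a workstation, an alternative to exporting and later unsetting the two variables is to set them for a single docker invocation only. This is a minimal sketch under that assumption: `signed_push` and the `DOCKER` override variable are hypothetical names introduced here, and port 4443 is the Notary port used earlier in this step.

```shell
# Allow the docker binary to be overridden (useful for testing the wrapper).
DOCKER=${DOCKER:-docker}

# Push one image with Docker Content Trust enabled for this command only.
#   $1 = Harbor host or IP
#   $2 = full image reference to push
signed_push() {
    DOCKER_CONTENT_TRUST=1 \
    DOCKER_CONTENT_TRUST_SERVER="https://$1:4443" \
        "$DOCKER" push "$2"
}

# Usage (after `docker login <harbor_ip>`):
#   signed_push <harbor_ip> <harbor_ip>/private/nginx:latest
```

Because the variables are passed as a command prefix, other docker commands in the same session keep running without content trust.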

STEP 8

Clair is an open source project for the static analysis of vulnerabilities in application containers. To enable it go to the Harbor web-interface and click on “vulnerability” -> “edit” and select the scan frequency. Save and also click “scan now” if it’s your first time doing this.